The SCAMP kernel

Sat 27 February 2021Tagged: cpu, software

You might think it's a bit early to be working on an operating system, given that I don't have a CPU to run it on. Maybe you're right. But working on software is easy and working on hardware is time-consuming, so here we are.

The current state of the kernel is that it can read files from a simple filesystem stored on an emulated block device, exposing a roughly Unix-like API (open()/read()/write()/close()), and assign file descriptors. It can also use the filesystem API to load code from the disk and execute it as a user program via the exec() system call.

It can't yet write to disk, and opendir()/readdir() are still unimplemented, as is almost everything else, but I am making relatively good progress and with any luck the kernel will be feature-complete pretty soon. Once the filesystem is done, there's not actually that much more to add.

I'm not quite sure it's considered correct to call it a "kernel", given that the SCAMP CPU has no memory management unit and no concept of user mode vs kernel mode. I'm calling it a "kernel" anyway in the absence of a better term.

Currently, the boot process is as follows:

- the bootloader in ROM loads the kernel from the start of the disk and jumps to it

- the kernel initialises the block device and file descriptor table, and calls exec(["/bin/init"])

- exec() uses the filesystem API to read /bin/init into memory starting at 0x100, and then jumps to it

- init reads /etc/motd and writes it to the console

- init halts, in the absence of anything else to do

Eventually init might be replaced by a shell script so that it can be more conveniently changed. The ultimate goal of init is to start a shell, but I haven't got that far yet.

Here's an asciinema recording of the SCAMP system booting in the emulator, with an emulated clock speed of 500 kHz. I don't actually know what clock frequency the real hardware will be able to run at, but I hope 500 kHz is achievable.

(The jerky motd output is because it reads a block, writes it to the console, read the next block, writes it to the console, and so on - there's a pause in between each block).

The kernel is mostly implemented in SLANG, with inline assembly code where necessary. I expect more of it will turn into inline assembly code when the practical bottlenecks are identified. You can read the code in the os/ directory of the git repo. Or try it out if you can work out how to build it! I'll help you if you want.

The kernel is about 8K words of code, while init is 3K and /etc/motd is about 5K. You may therefore be surprised that in the screen recording, the kernel loads in about 2 seconds while init doesn't produce any output for about 10 seconds, and then seems to very slowly write the contents of the text file. The reason is that the bootloader loads the kernel with a short, special-purpose piece of assembly code, while the rest of it is both more general, and written in SLANG. I expect it won't be hard to improve the SLANG parts, but I don't want to waste time on it yet.

Executables work the same way as in CP/M. The contents of a file are loaded into memory starting at 0x100 and then jumped to. There's no header on the file, and no support for dynamic linking etc., it just contains raw memory contents.

The OS design is kind of a bastard child of CP/M and Unix. It has a Unix-like system call API and filesystem layout, but CP/M-like capabilities. It has the simplicity that I love from CP/M, and the API that I love from Unix. But is also worse and less capable than both. Swings and roundabouts.

System calls

Each system call is accessed by calling a function pointer in a table stored just below the pseudoregisters (i.e. addresses up to 0xfeff). exit()'s pointer is stored at 0xfeff, so to call exit(0) in assembly language, something like this would work, where parentheses indicate indirection:

push 0

call (0xfeff)The system call references is in doc/SYSCALLS.md. Most of them are not implemented yet, I'm mostly working my way through implementing them in the order that I come across a need for them.

Compared to the normal Unix system calls, the main surprising differences are:

- system() is a system call instead of a wrapper around fork()/exec(): this is part of my plan for suspending the running process and replacing it with a child

- fork() is missing: we won't support it

- ioctl() is missing: so far I have no use for it, but it might appear eventually

- sbrk() is missing: this is implemented in "user space" instead, since the user program always owns all of the memory, up to the start of the kernel

- dup() is missing: there's a comparable but better system call named copyfd() (which is actually roughly the same as dup2(), maybe I should rename it)

- we gain an osbase() call that reports the start address of the kernel, and a cmdargs() that supplies command-line arguments

And besides that, anything related to networking, pipes, user accounts, file permissions, date/time, IPC, and signals doesn't exist because these concepts don't exist.

Filesystem

The filesystem is the vast majority of the kernel. Having implemented some of it, it has become very clear why DOS is called a "disk-operating system". Operating the disk is basically all you need to do.

Fitting the theme of the rest of the project, the filesystem is about the simplest workable solution I could think of. It assumes a 512-byte block size, which I know to be correct for SD cards, and I expect won't be too much of an impediment for CompactFlash. It supports 16-bit block numbers, which means up to 65536 blocks or 32 MBytes.

Blocks 0 to 63 are unused: these are available for the kernel to be stored in. I don't expect to need a full 32 KBytes for the kernel, but I at least shouldn't ever have to relocate the filesystem.

The next 16 blocks are a free-space bitmap for the entire disk (including the first 80 blocks, for sake of simplicity). Each block on the disk is represented by 1 bit in the free-space bitmap. When a new block is needed, the free-space bitmap is linear-searched until a free space is found.

Block 80 (the root directory block) and onwards are the filesystem contents. Each of these blocks begins with a 4 byte header encoding:

- (6 unused bits)

- 1 bit: block type: directory=0, file=1

- 9 bits: length of block contents

- 16 bits: "next" block pointer

When the contents of a file or directory are too long to fit in 1 block, the rest of the contents are in the block pointed to by the "next" field, forming a linked list of blocks. A "next" pointer of 0 indicates that this block is the last one for the file.

A directory block contains 15x 32-byte directory entries, with 28 bytes wasted at the end of the block. (Maybe I should change this to 33-byte directory entries to gain an extra character in filenames for free.)

A directory entry is just a filename (1 to 30 bytes) and a block pointer (2 bytes). There is no support for symlinks, reference counting, permissions, atime/ctime/mtime, etc. as these things are not necessary. Unused directory entries are indicated by having an empty filename.

I think the filesystem is a substantial improvement over the CP/M filesystem, because it supports directories, supports longer filenames than 8-dot-3, and supports files with a content length that is not a multiple of the block size. In CP/M, text files that don't end on a block boundary are suffixed with a load of ^Z, because ^Z means EOF in CP/M. That said, my filesystem API only exposes word-aligned access to file contents, which means files with an odd number of bytes will probably end up with a spurious trailing 0 byte anyway...

Exception handling

longjmp is my goto.

I am quite fond of the kernel's exception handling mechanism. Each system call that needs to handle exceptions starts with:

var err = catch();

if (err) return err;catch() returns 0, but if anything, anywhere down the call stack, subsequently calls throw(err), then the err value will magically pop out of catch() and the system call will return the error to user space. Pretty neat, no?

It is based on the setjmp()/longjmp() "nonlocal goto" mechanism from C.

You give setjmp() a pointer to a "jmp_buf", which stores information about the calling environment (stack pointer, return address, other registers etc. depending on the system). On the first call, setjmp() returns 0.

When you subsequently call longjmp() with the same jmp_buf, it restores the saved state and returns a nonzero value back to the place that first called setjmp(). It acts exactly as if control had magically transferred back to setjmp(), which then returns a new value.

I love the idea of longjmp() but have never had a substantial excuse to use it before. The implementation is surprisingly straightforward, although there are some caveats:

- if you try to use longjmp() to return control to a function that has already returned, then that function's stack frame is gone, potentially replaced with anything, and it will fail in surprising ways

- (in SLANG, but not necessarily in C) if you try to use setjmp() inside an expression (e.g. var n = 10 + catch()), then the other parts of the expression that should have been pushed onto the stack will not be there any more and it will fail in surprising ways

The man page says:

While it can be abused, the traditional C "goto" statement at least has the benefit that lexical cues (the goto statement and the target label) allow the programmer to easily perceive the flow of control. Nonlocal gotos provide no such cues: multiple setjmp() calls might employ the same jmp_buf variable so that the content of the variable may change over the lifetime of the application. Consequently, the programmer may be forced to perform detailed reading of the code to determine the dynamic target of a particular longjmp() call. (To make the programmer's life easier, each setjmp() call should employ a unique jmp_buf variable.)

Adding further difficulty, the setjmp() and longjmp() calls may not even be in the same source code module.

In summary, nonlocal gotos can make programs harder to understand and maintain, and an alternative should be used if possible.

Even though my use case involves multiple different catch() calls with the same jmp_buf, and even though the catch() and throw() calls are not always in the same source module, I don't think it's too hard to understand. throw(err) always passes an err to the top of the current system call.

There is a slight footgun, in that if I forget to call catch() in a system call that can trigger a throw(), then we're in the case of "trying to return control to a function that has already returned" (i.e. the last system call that did call catch()), and it will fail in surprising ways. I'll just try not to do that.

Kernel profiling

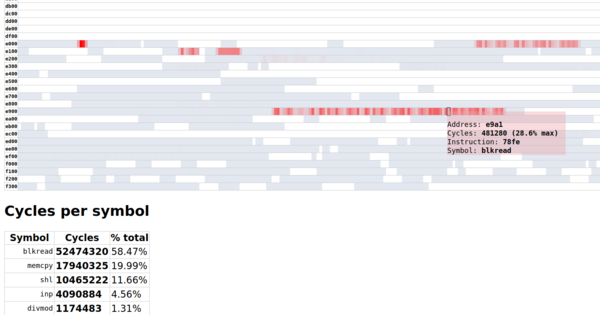

Profiling the kernel works just like any other program: run it in the emulator with -p, and look at the generated HTML report.

Since I last wrote about the profiling tool, I have added a killer new feature: it now resolves addresses to symbols!

The report generator can now take in the annotated hex output produced by the assembler, extract symbol names from the comments, and annotate memory addresses with the symbol they are associated with:

This basically automates the task of finding a hotspot in memory, looking at the address, then searching through the annotated hex to find out which function is resident at that address.

It knows how much time is spent executing each function, and gives a breakdown of how many cycles were spent in each one, which is really useful for directing optimisation efforts. In the screenshot above, you can see that 58% of execution time was spent inside the blkread() function, which probably means that is a prime candidate for reimplementing in assembly language.

Just by looking at the length of "red" addresses in blkread(), you can see that it's not reading data very efficiently. In the ideal case, reading a 512-byte block from the block device would execute a single in instruction 512 times, but it looks like the function is doing quite a lot of work besides that.

Similarly memcpy() is used a lot and would benefit from a more efficient implementation.

If you want, you can look at the current blkread() and memcpy() implementations. Neither of these implementations are inherently bad, it's just that the compiler is a bit of a blunt instrument at the moment.

Bad bits

Currently the block device and serial port don't actually represent any real hardware, they're just placeholders that allow me to develop the rest of the system using the emulator. Eventually I do want it to work with real hardware, and at that point I'll probably make the emulator match the hardware.

In Unix, IO devices are represented by device files like /dev/ttyS0, and if you want to write to a particular device, you can open the device file to get a file descriptor for it. I don't think I want to bother supporting device files, because the only devices I'll have are the block device for the filesystem and the serial ports. So for now I am just hard-coding file descriptor 3 to be the serial port for the console. For example, to explicitly connect stdin/out to the serial port, you can use the copyfd() call:

copyfd(0, 3); # stdin on fd 0

copyfd(1, 3); # stdout on fd 1Similarly, you can connect stdin/out to a file on the disk by using copyfd() with the file's fd instead of 3.

Currently the kernel and the user space library both contain identical implementations of common functions like memcpy() etc. There's not a lot of point wasting memory on 2 copies of the same function, so I'd like to come up with a good way to share these. Perhaps I should just make them into system calls. The system call overhead is precisely the same as that of a normal function call, so this wouldn't incur any performance penalty. The only downside is that it means you need to rebuild the kernel if you want to change the implementation. Although the upside is that all the user space programs get the improved version for free without being recompiled.

If you like my blog, please consider subscribing to the RSS feed or the mailing list: